Navigating Bias, Displacement, and Accountability

Key Takeaways:

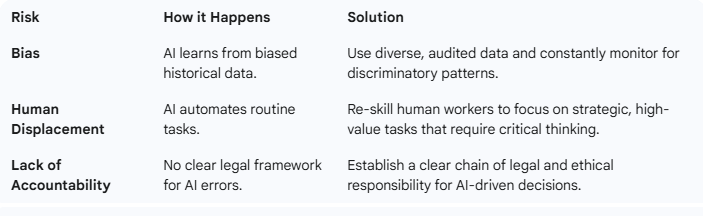

The biggest risks of using Contract AI are bias, human displacement, and a lack of clear accountability. While this technology promises efficiency, it can also amplify existing inequalities if not implemented responsibly. AI models are not neutral; they learn from the data they are trained on, and if that data is biased, the resulting contracts will be too. This can lead to unfair or discriminatory outcomes.

What is Bias in Contract AI and How Does it Happen?

Bias in Contract AI occurs when the AI system learns and perpetuates unfair or discriminatory patterns from its training data. This happens because the historical contracts used to train the model may reflect human biases related to race, gender, or socioeconomic status. For example, an AI could be trained on a dataset where men were historically offered higher-value contracts, causing the AI to continue this trend.

The result is a system that automates discrimination along with efficiency. This can lead to legal challenges and a breakdown of trust. Addressing the problem starts with a commitment to fair and diverse training data.
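One way to make the concern concrete is to check historical contract data for group-level disparities before it is used for training. The sketch below is illustrative only: the records, the group labels, the `REVIEW_THRESHOLD` value, and the function names are all hypothetical and do not come from any particular product or dataset. It compares the average contract value per group and flags the data for human review when the gap is large.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical records: (group label, contract value in USD).
# The values are invented purely for illustration.
historical_contracts = [
    ("men", 120_000),
    ("men", 100_000),
    ("men", 110_000),
    ("women", 80_000),
    ("women", 90_000),
    ("women", 85_000),
]


def mean_value_by_group(records):
    """Return the average contract value for each group label."""
    values = defaultdict(list)
    for group, value in records:
        values[group].append(value)
    return {group: mean(vals) for group, vals in values.items()}


def disparity_ratio(averages):
    """Ratio of the lowest group average to the highest (1.0 means parity)."""
    return min(averages.values()) / max(averages.values())


averages = mean_value_by_group(historical_contracts)
ratio = disparity_ratio(averages)

# Hypothetical cutoff; choosing a real one is a policy decision, not a technical one.
REVIEW_THRESHOLD = 0.9
needs_review = ratio < REVIEW_THRESHOLD

print(averages, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own, since contract values can differ for legitimate reasons, but it indicates that the data deserves a closer look before a model learns from it.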

How Can Human Displacement Be Managed?

The displacement of human jobs is a major concern with the rise of Contract AI. Roles like paralegals, contract managers, and junior lawyers are at risk as AI automates their tasks. The key to managing this is not to stop progress but to pivot.

Instead of seeing AI as a replacement, we should view it as a partner. Legal teams can be retrained to focus on higher-level work while AI handles tedious or repetitive tasks. That higher-level work includes interpreting complex legal situations, negotiating face-to-face, and providing strategic advice that AI cannot. Our experience has shown that AI works best as a tool to augment human expertise, not replace it.

Why is Accountability a Challenge with Contract AI?

Accountability is a challenge with Contract AI because it is often unclear who is responsible when the AI makes a mistake. Unlike a human lawyer, an AI system cannot be held liable for negligence. This creates a legal and ethical vacuum.

Is the software developer responsible? Is it the company that implemented the AI? Or is it the data scientist who trained the model with flawed data? Without a clear legal framework, clients and stakeholders may have no recourse if an AI-generated contract contains a critical error. Establishing a clear chain of responsibility is crucial for building trust.

Learn more about responsible AI implementation and how you can ensure your organization is prepared to navigate these challenges. Book a free demo of CobbleStone’s award-winning AI-based contract management solution today!